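This is the concepts installment of the GCD series. Drawing on the developer documentation and related books, it introduces the concepts and data structures behind GCD. Later articles will cover how it is implemented.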

Background

Source code and versions. All of the source code can be downloaded from Apple's open source website.

Source | Version
-|-
libdispatch | 1008.250.7
libpthread | 330.250.2
xnu | 6153.11.26

Concepts

Processes

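In a sense, processes are a relic of an earlier era. Modern operating systems, including OS X and iOS, schedule only threads. Apple offers a richer set of multithreading APIs than most other operating systems, which arguably puts it a few notches above them in this respect.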

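UNIX was originally designed as a multiprocess operating system. The process was the basic unit of execution and also the container for the resources needed during execution: virtual memory, file descriptors, and various other objects. Developers wrote sequential programs that started at the entry point, main, and ran until main returned. Execution was serialized and easy to reason about.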

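However, this approach soon proved too rigid, leaving too little flexibility for tasks that need to run concurrently. Another problem is that most processes sooner or later block on I/O, and blocking on I/O means giving up most of the process's time slice. This hurts performance considerably, because process context switches are expensive.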

Threads

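Threads emerged as a way to make the most of a process's time slice: with multiple threads, a program's execution can be split into subtasks that appear to run concurrently. If one subtask blocks, the rest of the time slice can be given to another subtask.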

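CPUs of that era were limited, however: even multithreaded code could run only one thread at a time. Preempting a thread within a process costs less than a multitasking system preempting a whole process, so it made sense for most operating systems to shift their scheduling unit from processes to threads. Switching between threads of the same process is cheap: only the registers need to be saved and restored. A process switch, by contrast, must also switch the virtual memory space, which brings a lot of low-level overhead, such as flushing caches and the TLB (Translation Lookaside Buffer).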

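With the arrival of multiprocessor architectures, and multicore architectures in particular, threads gained a new lease on life. Suddenly, two threads could truly run at the same time. Multicore processors are especially well suited to threads, because the cores share the same RAM and typically at least the last-level cache, which gives threads a natural basis for sharing virtual memory. Multiprocessor architectures, by contrast, may lose some performance because of non-uniform memory access (NUMA) and cache coherency overhead.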
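To make the shared-address-space point concrete, here is a minimal POSIX C sketch (not from the original article; value_after_thread and value_after_fork are hypothetical helper names). A thread created with pthread_create writes to the same global variable its creator reads, while a child created with fork gets its own copy of the address space, so the parent never sees the child's write.

```c
#include <assert.h>
#include <pthread.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* A global variable lives in the process's virtual memory. */
static int shared_value = 0;

static void *thread_body(void *arg) {
    (void)arg;
    shared_value = 42;  /* same address space as the creating thread */
    return NULL;
}

/* Value the caller sees after a second thread writes 42. */
int value_after_thread(void) {
    pthread_t tid;
    shared_value = 0;
    if (pthread_create(&tid, NULL, thread_body, NULL) != 0)
        return -1;
    pthread_join(tid, NULL);  /* joining also makes the thread's write visible here */
    return shared_value;
}

/* Value the parent sees after a forked child writes 42. */
int value_after_fork(void) {
    shared_value = 0;
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        shared_value = 42;  /* changes only the child's private copy */
        _exit(0);
    }
    int status = 0;
    pid_t waited = waitpid(pid, &status, 0);
    assert(waited == pid);
    (void)waited;
    return shared_value;
}
```

Calling value_after_thread() yields 42 while value_after_fork() yields 0: the thread shares memory with its creator, and the child process does not.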

Mach thread definition

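In Mach, the thread is the smallest unit of execution. A thread represents the underlying machine register state together with various scheduling statistics. A thread is defined as follows: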

```c
struct thread {
    /* ... */
};
```

Threading options

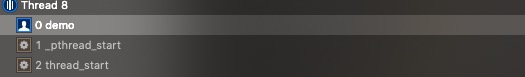

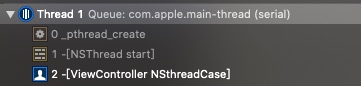

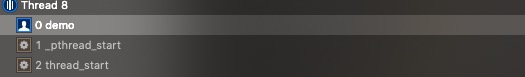

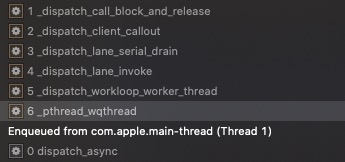

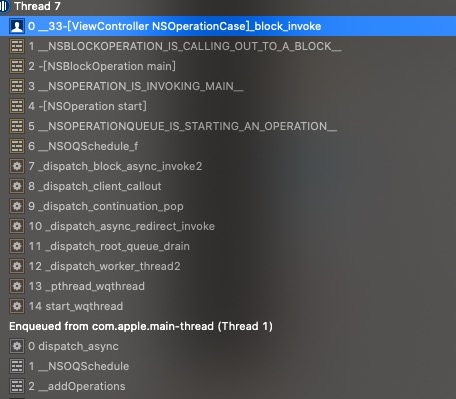

The multithreading APIs we commonly use are NSThread, pthread, GCD, and NSOperation. Let's look at a set of call stacks:

pthread

NSThread

GCD

NSOperation

These show that NSThread creates its threads with pthread underneath, and likewise NSOperation is implemented on top of GCD.

pthread

Starting with Leopard (10.5), OS X is a certified UNIX implementation, which means OS X is fully compliant with the Portable Operating System Interface (POSIX).

POSIX compatibility is provided by the BSD layer in XNU. BSD is a wrapper around the Mach kernel.

pthread stands for POSIX threads. The POSIX thread model is the threading API used by practically every operating system other than Windows.

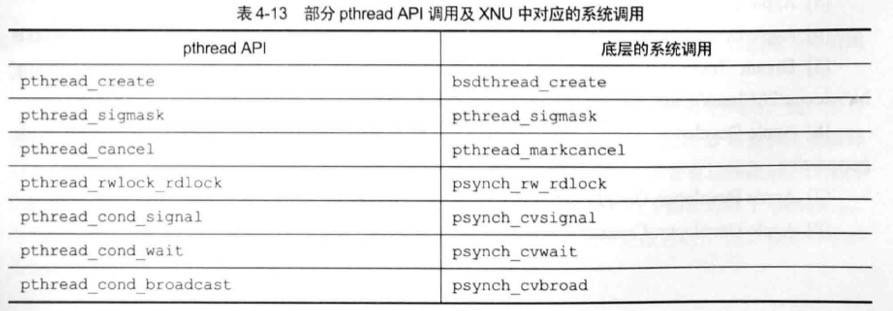

OS X and iOS map the pthread API onto native system calls, and the kernel creates threads through those system calls.
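As a minimal sketch of the pthread API itself, the C code below splits a sum across a fixed number of threads with pthread_create and pthread_join. The worker count of 4 and the names parallel_sum and struct slice are illustrative assumptions, not part of any Apple API; on OS X and iOS each pthread_create call is ultimately served by the kernel's thread-creation system call, as the paragraph above describes.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define NUM_WORKERS 4  /* illustrative, fixed worker count */

/* One thread's share of the work: sum data[begin, end). */
struct slice {
    const long *data;
    size_t begin, end;
    long sum;
};

static void *sum_slice(void *arg) {
    struct slice *s = arg;
    s->sum = 0;
    for (size_t i = s->begin; i < s->end; i++)
        s->sum += s->data[i];
    return NULL;
}

/* Sum data[0, n) using NUM_WORKERS POSIX threads. */
long parallel_sum(const long *data, size_t n) {
    pthread_t threads[NUM_WORKERS];
    struct slice slices[NUM_WORKERS];
    size_t chunk = (n + NUM_WORKERS - 1) / NUM_WORKERS;

    for (int i = 0; i < NUM_WORKERS; i++) {
        size_t begin = (size_t)i * chunk;
        size_t end = begin + chunk;
        /* Clamp both bounds so trailing slices may be empty. */
        slices[i] = (struct slice){ data, begin < n ? begin : n, end < n ? end : n, 0 };
        int rc = pthread_create(&threads[i], NULL, sum_slice, &slices[i]);
        assert(rc == 0);  /* a real program would handle failure */
        (void)rc;
    }

    long total = 0;
    for (int i = 0; i < NUM_WORKERS; i++) {
        pthread_join(threads[i], NULL);  /* wait for the thread, then read its result */
        total += slices[i].sum;
    }
    return total;
}
```

The caller owns every thread here: it decides how many to create, what each one does, and when to join it. That manual bookkeeping is exactly what GCD asks developers to stop thinking about.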

GCD

Snow Leopard (10.6) introduced a new API for multiprocessing: Grand Central Dispatch (GCD). This API brought a change in programming paradigm:

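Instead of thinking in terms of threads and thread functions, developers are encouraged to think in terms of blocks of functionality.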

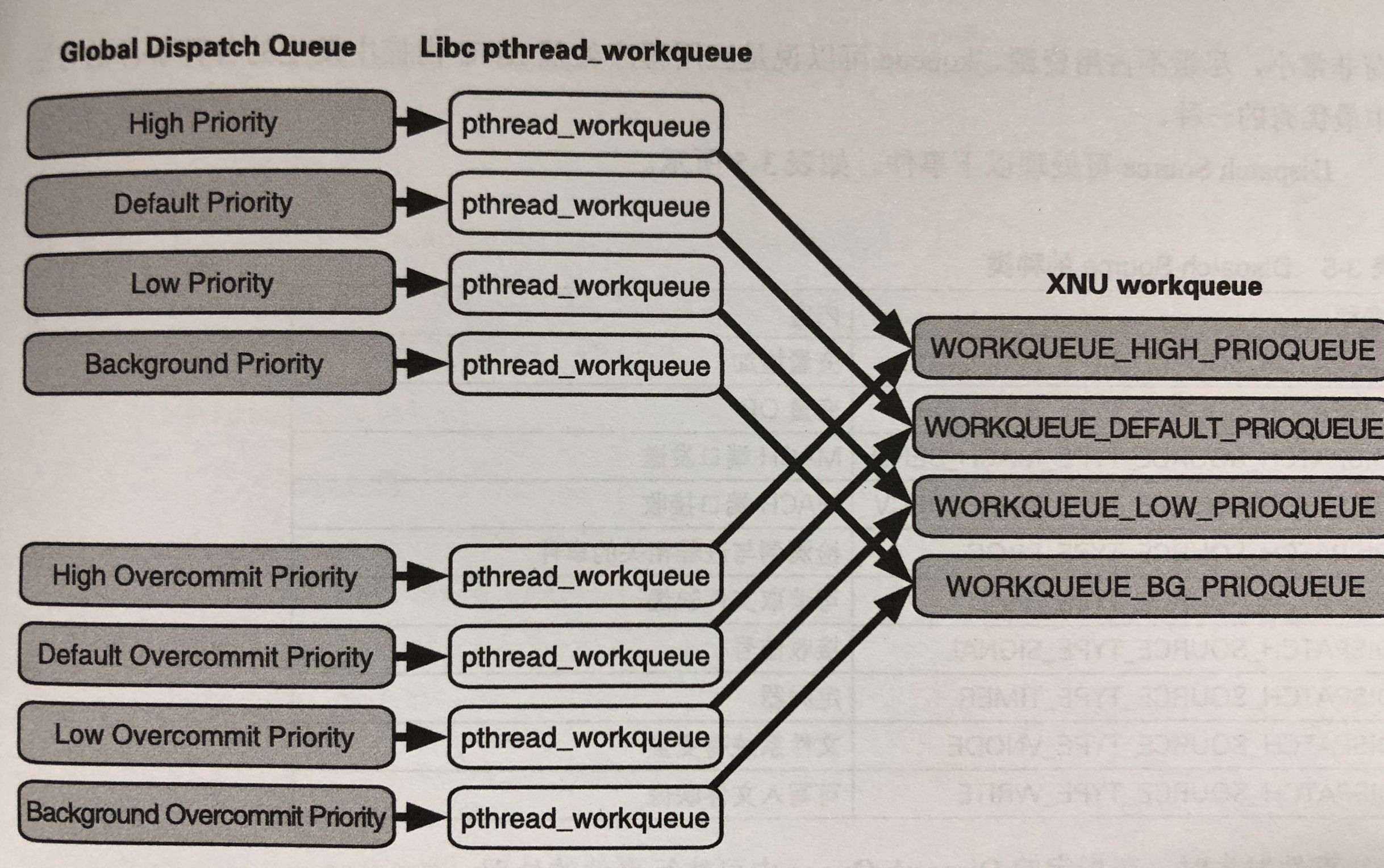

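Another advantage of GCD is that it scales automatically with the number of logical processors. The GCD API itself is built on the pthread_workqueue API, which XNU supports through the workq system calls. The details are covered in the next article, on the underlying implementation.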
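The "submit blocks of work and let the system manage the threads" model, plus scaling with the processor count, can be illustrated with a toy work queue built on plain pthreads. This is not how libdispatch is implemented (it relies on pthread_workqueue and the kernel's workq support, as noted above); the toy_queue type, its functions, and the one-worker-per-online-CPU policy via sysconf(_SC_NPROCESSORS_ONLN) are hypothetical choices for this sketch. Work items are a function plus a context pointer, similar in spirit to GCD's dispatch_async_f.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdlib.h>
#include <unistd.h>

/* A work item is a function plus its context (hypothetical toy API). */
typedef void (*work_fn)(void *context);
struct work_item { work_fn fn; void *context; struct work_item *next; };

/* Hypothetical toy queue: a FIFO list drained by a fixed pool of worker threads. */
struct toy_queue {
    pthread_mutex_t lock;
    pthread_cond_t has_work;
    struct work_item *head, *tail;
    int shutting_down;
    long nworkers;
    pthread_t *workers;
};

static void *worker_main(void *arg) {
    struct toy_queue *q = arg;
    for (;;) {
        pthread_mutex_lock(&q->lock);
        while (q->head == NULL && !q->shutting_down)
            pthread_cond_wait(&q->has_work, &q->lock);
        struct work_item *item = q->head;
        if (item == NULL) {  /* shutting down and nothing left to run */
            pthread_mutex_unlock(&q->lock);
            return NULL;
        }
        q->head = item->next;
        if (q->head == NULL)
            q->tail = NULL;
        pthread_mutex_unlock(&q->lock);
        item->fn(item->context);  /* run the work outside the lock */
        free(item);
    }
}

/* Start one worker per online logical CPU; returns 0 on success. */
int toy_queue_start(struct toy_queue *q) {
    pthread_mutex_init(&q->lock, NULL);
    pthread_cond_init(&q->has_work, NULL);
    q->head = q->tail = NULL;
    q->shutting_down = 0;
    long ncpu = sysconf(_SC_NPROCESSORS_ONLN);
    if (ncpu < 1)
        ncpu = 1;
    q->workers = calloc((size_t)ncpu, sizeof *q->workers);
    if (q->workers == NULL)
        return -1;
    q->nworkers = 0;
    for (long i = 0; i < ncpu; i++) {
        if (pthread_create(&q->workers[i], NULL, worker_main, q) != 0)
            break;  /* keep however many workers did start */
        q->nworkers++;
    }
    if (q->nworkers == 0) {
        free(q->workers);
        return -1;
    }
    return 0;
}

/* Enqueue fn(context); the caller never touches a thread directly. */
int toy_queue_async(struct toy_queue *q, work_fn fn, void *context) {
    assert(q != NULL && fn != NULL);
    struct work_item *item = malloc(sizeof *item);
    if (item == NULL)
        return -1;
    item->fn = fn;
    item->context = context;
    item->next = NULL;
    pthread_mutex_lock(&q->lock);
    if (q->tail != NULL)
        q->tail->next = item;
    else
        q->head = item;
    q->tail = item;
    pthread_cond_signal(&q->has_work);
    pthread_mutex_unlock(&q->lock);
    return 0;
}

/* Let the workers drain the queue, then join them and release resources. */
void toy_queue_stop(struct toy_queue *q) {
    pthread_mutex_lock(&q->lock);
    q->shutting_down = 1;
    pthread_cond_broadcast(&q->has_work);
    pthread_mutex_unlock(&q->lock);
    for (long i = 0; i < q->nworkers; i++)
        pthread_join(q->workers[i], NULL);
    free(q->workers);
    pthread_mutex_destroy(&q->lock);
    pthread_cond_destroy(&q->has_work);
}

/* Example work function: bump an atomic counter passed as the context. */
void increment_counter(void *context) {
    atomic_fetch_add((atomic_int *)context, 1);
}
```

Usage: call toy_queue_start, submit work with toy_queue_async(&q, increment_counter, &counter), then call toy_queue_stop, which lets the workers finish everything still queued before joining them. A fixed pool like this cannot react when workers block; the real system can adjust its number of worker threads with help from the kernel.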

NSOperation

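An object-oriented design. Compared with GCD, NSOperation puts more emphasis on the state of tasks and on controlling them, for example dependencies between operations, cancellation, and observable states such as executing and finished.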

NSThread

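NSThread is the thinnest, most lightweight wrapper and the most flexible to use, but you must manage the thread lifecycle, synchronization, and locking yourself. Underneath, it is implemented with pthread.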

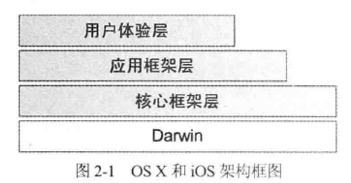

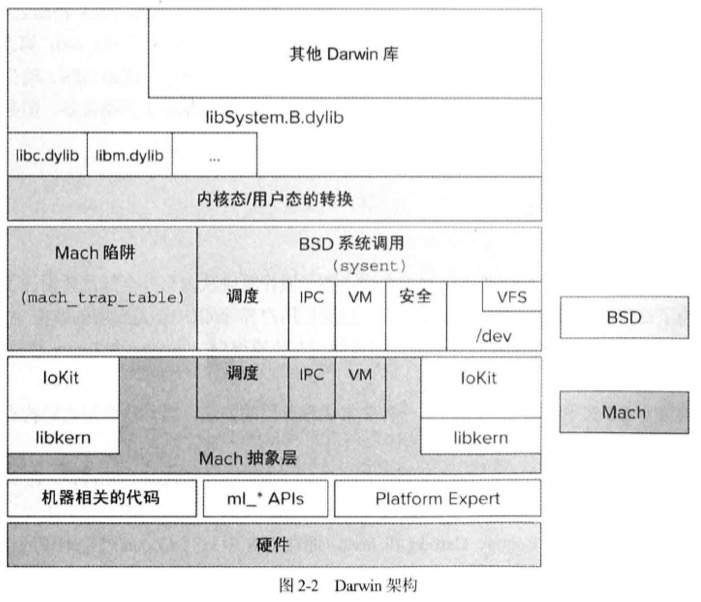

XNU overview

XNU is the core of OS X and is made up of the following components:

- Mach microkernel

- BSD layer

- libkern

- I/O Kit

Mach

The API provided by the Mach microkernel is very basic and handles only the most fundamental responsibilities of an operating system:

- Process and thread abstractions

- Virtual memory management

- Task scheduling

- Interprocess communication and message passing

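Mach's own API is quite limited, and Mach was never designed to be a full-featured operating system; Apple also discourages using the Mach APIs directly. Still, without these basic APIs nothing else could be built. Any additional functionality, such as file and device access, must be implemented on top of them, and that additional functionality is provided by the BSD layer.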

BSD

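The BSD layer is built on top of Mach and is an inseparable part of XNU. It offers a robust and more modern API and provides the POSIX compatibility mentioned earlier. BSD provides higher-level abstractions, including: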

- The UNIX process model

- The POSIX thread model

- UNIX users and groups

- The network stack (BSD Socket API)

- File system access

- Device access

libkern

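Most kernels are written entirely in C and low-level assembly, but device drivers in XNU can be written in C++. To support a C++ runtime environment, XNU includes the libkern library, a built-in, self-contained C++ library.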

I/O Kit

The device driver framework. The benefits of using a C++ environment are:

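- A complete, self-contained execution environment inside the kernel, which lets developers build elegant, stable device drivers more quickly.

- Drivers can work in an object-oriented environment.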

OS X and iOS architecture diagram

User mode and kernel mode

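The kernel is a trusted system component that also controls extremely critical functions, so kernel functionality must be strictly separated from applications; otherwise, an unstable application could crash the entire system. The kernel exposes its services to user-mode code only through system calls, which protects the kernel's stability.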
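A small C illustration of crossing the user/kernel boundary, assuming a Linux or macOS system where the generic syscall() entry point and SYS_getpid from <sys/syscall.h> are available (syscall() is deprecated on recent macOS but still present). The libc wrapper getpid() and a raw syscall(SYS_getpid) both trap into the kernel for the same service; getpid_via_syscall and getpid_via_libc are hypothetical helper names.

```c
#define _GNU_SOURCE  /* exposes syscall() in <unistd.h> on glibc */
#include <assert.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Ask the kernel for our PID through the generic system call entry point. */
long getpid_via_syscall(void) {
    long pid = syscall(SYS_getpid);  /* user mode -> kernel mode -> back */
    assert(pid > 0);
    return pid;
}

/* The usual route: the libc wrapper, which performs the same system call. */
long getpid_via_libc(void) {
    return (long)getpid();
}
```

Both functions return the same PID. Application code normally uses the wrapper, and the wrapper is what hides the mode switch.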

kqueue

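kqueue comes up repeatedly when analyzing GCD. kqueue is BSD's kernel event notification mechanism. A kqueue is a descriptor on which a process can block until an event of a particular type and kind occurs. User-mode processes can wait on this descriptor, so kqueue provides a simple and efficient way to synchronize one or more processes.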

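kqueue and its corresponding kevent form the basis of asynchronous I/O in the kernel (and thereby also enable POSIX poll(2) and select(2)). This is especially important for GCD's waiting mechanism.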
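The "block on a descriptor until an event arrives" idea can be sketched in C. On macOS and FreeBSD the sketch registers an EVFILT_READ filter with kqueue() and kevent(); on other systems it falls back to poll(2) so that it still compiles. wait_readable is a hypothetical helper, the pipe used as an event source is an assumption for illustration, and creating a fresh kqueue per call is a simplification; GCD's own use of kqueue is far more involved.

```c
#include <assert.h>
#include <poll.h>
#include <time.h>
#include <unistd.h>
#if defined(__APPLE__) || defined(__FreeBSD__)
#include <sys/types.h>
#include <sys/event.h>
#define HAVE_KQUEUE 1
#endif

/* Block until fd is readable or timeout_ms elapses.
   Returns 1 if readable, 0 on timeout, -1 on error. */
int wait_readable(int fd, int timeout_ms) {
    assert(fd >= 0 && timeout_ms >= 0);
#ifdef HAVE_KQUEUE
    int kq = kqueue();  /* the kqueue itself is a descriptor */
    if (kq < 0)
        return -1;
    struct kevent change, event;
    /* Register interest in "fd has data to read"; one-shot is enough here. */
    EV_SET(&change, fd, EVFILT_READ, EV_ADD | EV_ONESHOT, 0, 0, NULL);
    struct timespec timeout = {
        .tv_sec = timeout_ms / 1000,
        .tv_nsec = (long)(timeout_ms % 1000) * 1000000L,
    };
    /* Submit the change and wait for at most one event in a single call. */
    int n = kevent(kq, &change, 1, &event, 1, &timeout);
    close(kq);
    if (n < 0 || (n == 1 && (event.flags & EV_ERROR)))
        return -1;
    return n;  /* 1 event delivered, or 0 on timeout */
#else
    /* Portable fallback: poll(2) expresses the same wait for one descriptor. */
    struct pollfd pfd = { .fd = fd, .events = POLLIN };
    int n = poll(&pfd, 1, timeout_ms);
    if (n < 0)
        return -1;
    return (n > 0 && (pfd.revents & POLLIN)) ? 1 : 0;
#endif
}
```

For example, on a freshly created pipe, wait_readable on the read end times out and returns 0; after one byte is written to the write end, it returns 1.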